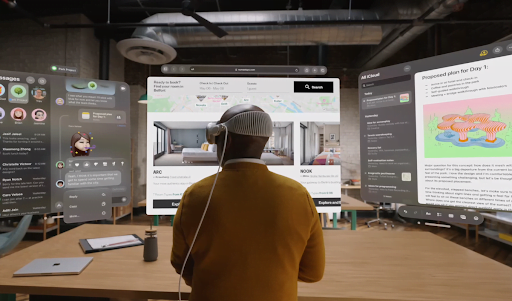

Apple’s latest foray into spatial computing, Apple Vision Pro, marks a significant leap towards redefining how we interact with technology. Spatial computing transcends traditional boundaries, allowing users to engage with both 2D and 3D content in a way that’s seamlessly integrated into the physical world.

There are two types of experiences that we can focus on:

- Rendering 2D content in space. This involves projecting existing device windows into the spatial realm, providing a familiar yet enhanced user interface.

- Interacting with 3D content. Apple Vision Pro places 3D content within a user’s environment. This content is not static; it adapts and reacts to its surroundings, offering a truly interactive experience.

Some examples of basic experiences that just use the 3D space to place windows include:

- Spatial window display with eye and pinch interactions, like a timer in the kitchen when cooking

- Portable workstation with the possibility of extending the MacBook display

Source: Screenshots from Apple Keynote WWDC 2023

Industry-specific experiences that take advantage of the third dimension

Here are some examples of experiences that are now possible:

- Retail and shopping: Transform the way customers interact with products through 3D e-commerce shopping, virtual try-ons, and AR visualization.

- Education and collaboration: Elevate learning and collaborative work by enabling interactions with 3D models and virtual simulations.

- Tourism and travel: Offer virtual explorations of distant locales, creating immersive travel experiences through partnerships and app development.

- Gaming: Develop AR games with intuitive controls, blending digital content with the physical world for enhanced gameplay.

Key learning resources for developers

To grasp the fundamentals of spatial computing and start building apps that leverage this technology, here are some essential resources:

- WWDC23 sessions. The conference has been pivotal in introducing spatial computing to developers. Sessions such as “Get started with building apps for spatial computing” and “Develop your first immersive app” cover everything from basic concepts to creating immersive scenes using Reality Composer Pro.

- SwiftUI for spatial computing. The “Meet SwiftUI for spatial computing” session is dedicated to using SwiftUI in visionOS and demonstrates how to use its foundational building blocks for app development.

- visionOS fundamentals. Sean Allen summarizes the key concepts in a down-to-earth manner, providing a clear overview without having to dig through heavy documentation.

- Apple documentation. Always a must for developers, and this is no exception. The SwiftUI and visionOS documentation cover all the information needed, ranging from basic SwiftUI concepts worth refreshing, such as app organization and scene management, to a thorough review of everything new that has been introduced on the platform.

From concept to reality: Structuring apps in spatial computing

While developing apps for spatial computing requires, to some extent, a new approach to structure and design, everything is built with SwiftUI, a framework we are all familiar with.

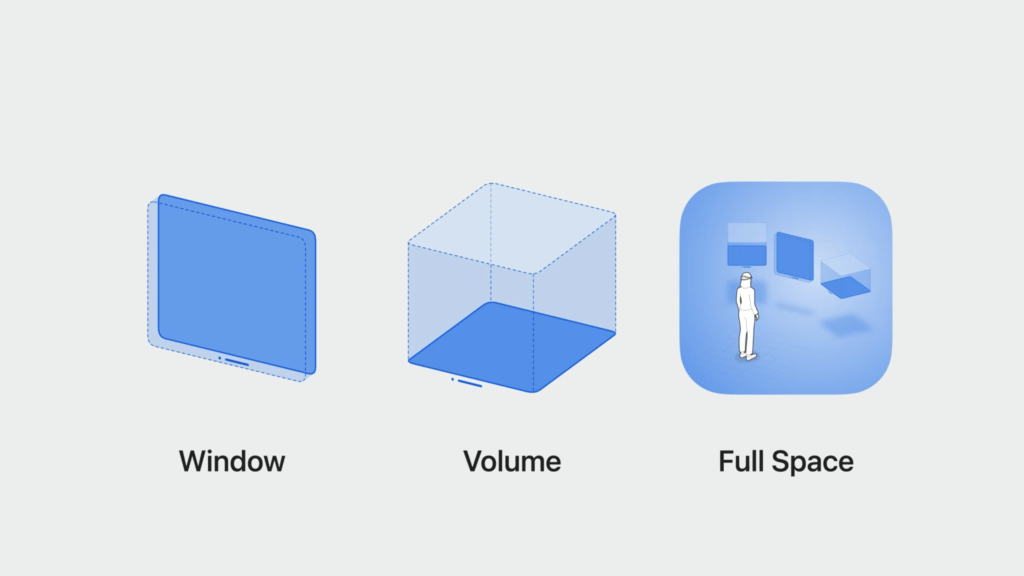

The architecture follows a logical progression from app struct to scenes, and finally, to windows, volumes, or immersive spaces. Notably, windows have evolved to support 3D content and can now adopt volumetric shapes with .windowStyle(.volumetric). Furthermore, a groundbreaking type of scene, ImmersiveSpace, allows developers to position SwiftUI views outside conventional containers.

Foundational building blocks (3 types of scenes that make a visionOS app)

Source: Get started with building apps for spatial computing, Apple
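As a minimal sketch of this hierarchy, the app below declares one scene of each kind: a window, a volume, and an immersive space. The scene identifiers ("main", "globe", "immersive") and the "Globe" model name are hypothetical placeholders, and the code assumes a visionOS target with a matching USDZ asset in the app bundle.

```swift
import SwiftUI
import RealityKit

@main
struct SpatialSampleApp: App {
    var body: some Scene {
        // Window: a regular SwiftUI window for 2D content (it can still host 3D content).
        WindowGroup(id: "main") {
            Text("Hello, visionOS")
                .padding()
        }

        // Volume: a window group rendered with the volumetric style.
        // "Globe" is a hypothetical USDZ asset in the app bundle.
        WindowGroup(id: "globe") {
            Model3D(named: "Globe")
        }
        .windowStyle(.volumetric)

        // Immersive space: content placed freely around the user.
        ImmersiveSpace(id: "immersive") {
            RealityView { content in
                // Populate the space with RealityKit entities here.
            }
        }
    }
}
```

Only the first WindowGroup opens at launch; the volume and the immersive space are opened on demand from code.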

Windows: The gateway to 3D content

At the heart of visionOS are windows: SwiftUI scenes, much like windows on the Mac, that can house both traditional views and 3D content. Each app can feature one or more windows, offering a versatile platform for developers to present their content. And windows are not limited to 2D views; in visionOS they support 3D content, extending the canvas for creators to paint their visions.
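To illustrate a window mixing 2D and 3D content, the sketch below places a Model3D between ordinary SwiftUI views. The view name, the "Chair" asset, and the button action are hypothetical; the model is assumed to be a USDZ file in the app bundle.

```swift
import SwiftUI
import RealityKit

// A window's content view mixing standard 2D controls with a 3D model.
struct ProductDetailView: View {
    var body: some View {
        VStack(spacing: 24) {
            Text("Vintage Chair")
                .font(.largeTitle)

            // Model3D loads the USDZ asset asynchronously and renders it
            // with real depth inside the window. "Chair" is a placeholder name.
            Model3D(named: "Chair")
                .frame(width: 300, height: 300)

            Button("Add to Cart") {
                // Hypothetical action; connect your own logic here.
            }
        }
        .padding()
    }
}
```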

Volumes: Elevating 3D content

Volumes, created with a WindowGroup and .windowStyle(.volumetric), are another type of scene designed specifically for showcasing 3D content. Unlike windows, volumes are optimized for 3D, making them ideal for presenting complex models like a heart or the Earth. This distinction lets developers choose the right container for their content and ensure the best possible user experience.
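A volume is usually given a physical size so that its model appears at a predictable scale. This sketch wraps the scene in its own Scene type (GlobeVolume, a hypothetical name) and shows a placeholder while the hypothetical "Globe" asset loads.

```swift
import SwiftUI
import RealityKit

// A volumetric scene sized in real-world units.
struct GlobeVolume: Scene {
    var body: some Scene {
        WindowGroup(id: "globe") {
            Model3D(named: "Globe") { model in
                // Scale the loaded model to fit the volume's bounds.
                model
                    .resizable()
                    .scaledToFit()
            } placeholder: {
                ProgressView()
            }
        }
        .windowStyle(.volumetric)
        // Width, height, and depth of the volume, in meters.
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)
    }
}
```

Inside an App's body, the scene is declared simply as GlobeVolume().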

Spaces: The final frontier of immersion

Spaces in visionOS introduce a new dimension of app interaction. By default, apps run in the Shared Space, where they coexist side by side much like apps on a desktop. Opening an ImmersiveSpace moves the app into a Full Space, where only its content is visible; this offers a more controlled, immersive experience and unlocks ARKit APIs for enhanced reality. Within that space, .immersionStyle(selection:in:) defines how the app integrates with the user’s environment, from mixed (content blended with the surroundings) to full (the surroundings replaced entirely).
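A minimal sketch of both halves of this flow: a scene declaring an immersive space that supports mixed and full immersion, and a button in a regular window that opens and dismisses it. The "solarSystem" identifier and both type names are hypothetical.

```swift
import SwiftUI
import RealityKit

// Declares an immersive space whose immersion style can be mixed or full.
struct SolarSystemSpace: Scene {
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        ImmersiveSpace(id: "solarSystem") {
            RealityView { content in
                // Add the space's RealityKit content here.
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .full)
    }
}

// A button shown in a window that moves the app between the Shared Space and a Full Space.
struct EnterSpaceButton: View {
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace
    @Environment(\.dismissImmersiveSpace) private var dismissImmersiveSpace
    @State private var isImmersed = false

    var body: some View {
        Button(isImmersed ? "Exit" : "Enter Solar System") {
            Task {
                if isImmersed {
                    await dismissImmersiveSpace()
                    isImmersed = false
                } else {
                    // Opening can fail or be cancelled, so check the result.
                    switch await openImmersiveSpace(id: "solarSystem") {
                    case .opened:
                        isImmersed = true
                    default:
                        isImmersed = false
                    }
                }
            }
        }
    }
}
```

Only one immersive space can be open at a time, which is why the button tracks its own state.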

If you want to make the most out of SwiftUI, ARKit and RealityKit, we encourage you to use ImmersiveSpace together with the new RealityView, since they were designed to be used together.
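As a sketch of that pairing, the view below is meant to be the content of an ImmersiveSpace and uses RealityView to place a RealityKit entity in the room. The view name, sphere size, and position are illustrative assumptions.

```swift
import SwiftUI
import RealityKit

// Content for an ImmersiveSpace: RealityView hosts RealityKit entities
// positioned in the space around the user.
struct FloatingSphereView: View {
    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // In an immersive space the origin starts at the user's feet, so
            // y = 1.5 m is roughly eye level and z = -1 m is one meter ahead.
            sphere.position = [0, 1.5, -1]
            content.add(sphere)
        }
    }
}
```

It would be used as ImmersiveSpace(id: "immersive") { FloatingSphereView() }.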

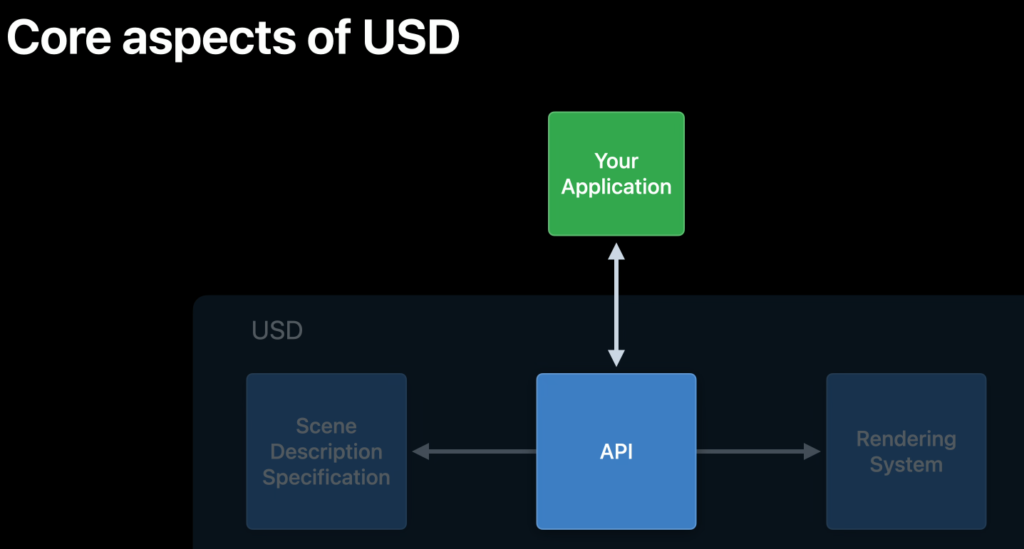

The Core of Apple Vision Pro: USDZ and Reality Composer Pro

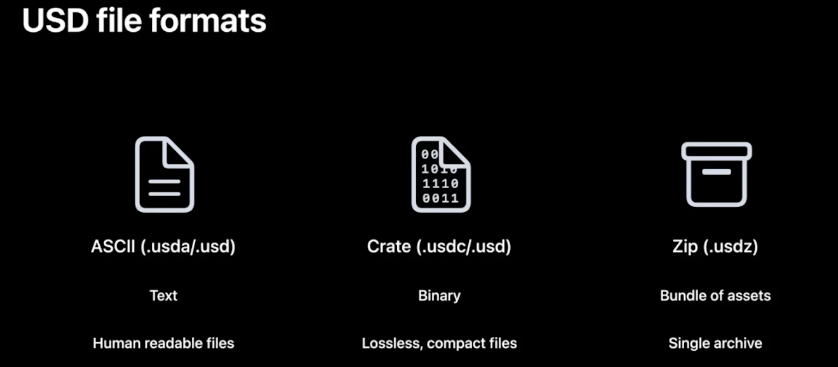

USDZ files

USDZ files, co-developed by Apple and Pixar, serve as the cornerstone for placing 3D objects in augmented reality environments.

Image sources: Understand USD fundamentals, Apple

We can create these files with Reality Composer Pro, a pivotal tool that ships with Xcode and offers a graphical interface for composing, editing, and previewing 3D content, enhancing the creation process with features like particle emitters and audio authoring.

To dive into the USD ecosystem and Reality Composer Pro, we recommend reviewing the following sessions from WWDC22 and WWDC23, which cover tools, rendering techniques, and the broader landscape of USDZ files. These resources are invaluable for developers looking to master spatial computing with Apple Vision Pro:
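Content authored in Reality Composer Pro lives in a Swift package that the visionOS app template creates (RealityKitContent), and it can be loaded into a RealityView by name. This sketch assumes that template setup and its default scene name, "Scene"; the view name is hypothetical.

```swift
import SwiftUI
import RealityKit
import RealityKitContent // Package generated by Xcode's visionOS app template

// Loads a scene authored in Reality Composer Pro.
struct ComposedSceneView: View {
    var body: some View {
        RealityView { content in
            do {
                // "Scene" is the template's default scene; replace it with your own.
                let scene = try await Entity(named: "Scene", in: realityKitContentBundle)
                content.add(scene)
            } catch {
                print("Failed to load Reality Composer Pro scene: \(error)")
            }
        }
    }
}
```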

- Understand USD fundamentals – WWDC22

- Explore USD tools and rendering – WWDC22

- Explore the USD ecosystem – WWDC23

- Meet Reality Composer Pro – WWDC23

- Work with Reality Composer Pro content in Xcode – WWDC23

Enhancing Interactivity with RealityKit and ARKit

Interactions in spatial computing go beyond traditional input methods, incorporating taps, drags, and hand tracking. RealityKit and ARKit form the backbone of this interactive framework, allowing for the augmentation of app windows with 3D content and the creation of fully immersive environments.
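As an example of a drag interaction on 3D content, the sketch below lets the user pinch and move a cube. RealityKit entities only receive SwiftUI gestures when they carry an InputTargetComponent and collision shapes; the view name and cube appearance are hypothetical.

```swift
import SwiftUI
import RealityKit

// A cube the user can pinch and drag around.
struct DraggableCubeView: View {
    var body: some View {
        RealityView { content in
            let cube = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // Both are required for the entity to be a gesture target.
            cube.components.set(InputTargetComponent())
            cube.generateCollisionShapes(recursive: false)
            content.add(cube)
        }
        .gesture(
            DragGesture()
                .targetedToAnyEntity()
                .onChanged { value in
                    // Convert the 3D gesture location into the parent entity's space.
                    guard let parent = value.entity.parent else { return }
                    value.entity.position = value.convert(value.location3D, from: .local, to: parent)
                }
        )
    }
}
```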

RealityKit: A closer look

RealityKit simplifies the integration of AR features, supporting accurate lighting, shadows, and animations. It operates on the entity-component-system (ECS) model, where entities are augmented with components to achieve desired behaviors and appearances. Custom components and built-in animations enrich the spatial experience, further bridged to SwiftUI through RealityView attachments.
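To make the entity-component-system model concrete, here is a sketch of a custom component (data) paired with a custom system (per-frame logic) that rotates every entity carrying the component. SpinComponent, SpinSystem, and the two helper functions are hypothetical names.

```swift
import RealityKit
import simd

// Component: plain data attached to an entity.
struct SpinComponent: Component {
    var radiansPerSecond: Float = .pi / 2
}

// System: logic that runs every frame for all entities matching a query.
struct SpinSystem: System {
    static let query = EntityQuery(where: .has(SpinComponent.self))

    init(scene: RealityKit.Scene) {}

    func update(context: SceneUpdateContext) {
        let delta = Float(context.deltaTime)
        for entity in context.entities(matching: Self.query, updatingSystemWhen: .rendering) {
            guard let spin = entity.components[SpinComponent.self] else { continue }
            // Rotate around the vertical axis proportionally to elapsed time.
            let step = simd_quatf(angle: spin.radiansPerSecond * delta, axis: [0, 1, 0])
            entity.transform.rotation = step * entity.transform.rotation
        }
    }
}

// Call once at launch (for example in your App's init) before using the component.
func registerSpinSupport() {
    SpinComponent.registerComponent()
    SpinSystem.registerSystem()
}

// Attaching the component is all it takes for SpinSystem to start rotating the entity.
func makeSpinningCube() -> ModelEntity {
    let cube = ModelEntity(mesh: .generateBox(size: 0.1))
    cube.components.set(SpinComponent())
    return cube
}
```

Keeping state in components and behavior in systems means any entity, including one loaded from Reality Composer Pro, can opt into the behavior just by receiving the component.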

Here is a code snippet of the RealityView structure, with some comments explaining the purpose of each code block.

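```swift
import SwiftUI
import RealityKit

struct LabeledSphereView: View {
    var body: some View {
        RealityView { content, attachments in
            // Load entities and add attachments to the root entity
            let sphere = ModelEntity(mesh: .generateSphere(radius: 0.1))
            content.add(sphere)
            if let label = attachments.entity(for: "label") {
                label.position = [0, 0.15, 0] // place the label above the sphere
                sphere.addChild(label)
            }
        } update: { content, attachments in
            // Update your RealityKit entities when the view re-renders
        } attachments: {
            // SwiftUI views, each given an id so RealityView can turn it into an entity
            Attachment(id: "label") {
                Text("Sphere")
            }
        }
    }
}
```

ARKit: Bridging the real and virtual worlds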

ARKitSession, DataProvider, and Anchor concepts introduce a structured approach to building AR experiences. Features like world tracking, scene geometry, and image tracking enable precise placement of virtual content in the real world, supported by comprehensive sessions and tutorials tailored for spatial computing.
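A minimal sketch of the session, provider, and anchor flow, using plane detection as the example data provider. It assumes the app has an open ImmersiveSpace (ARKit on visionOS only delivers data while the app is in a Full Space) and an NSWorldSensingUsageDescription entry in its Info.plist; PlaneTracker is a hypothetical name.

```swift
import ARKit

@MainActor
final class PlaneTracker {
    private let session = ARKitSession()
    private let planeDetection = PlaneDetectionProvider(alignments: [.horizontal])

    // Runs the session and reacts to plane anchors as they change.
    func run() async {
        guard PlaneDetectionProvider.isSupported else { return }
        do {
            try await session.run([planeDetection])
            for await update in planeDetection.anchorUpdates {
                switch update.event {
                case .added, .updated:
                    print("Plane \(update.anchor.id): \(update.anchor.classification)")
                case .removed:
                    print("Plane removed: \(update.anchor.id)")
                }
            }
        } catch {
            print("ARKit session failed: \(error)")
        }
    }
}
```

Other providers, such as world tracking or image tracking, follow the same pattern: create the provider, run it in the session, and iterate its anchorUpdates.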

Tools for development: From ObjectCapture to RoomPlan

The ObjectCapture API and RoomPlan API stand out as tools for generating 3D models from real objects and spaces. These APIs facilitate the creation of highly realistic and context-aware applications, extending the capabilities of developers to include custom object and room scanning functionalities in their apps.
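The reconstruction step of Object Capture can be sketched with RealityKit's PhotogrammetrySession, which turns a folder of photos into a USDZ model. It runs on a Mac (or a supported iPhone or iPad), not on Vision Pro itself, but its output can be loaded in a visionOS app. The function name and file locations are hypothetical.

```swift
import Foundation
import RealityKit

// Builds a USDZ model from a folder of captured images.
func reconstructModel(from imagesFolder: URL, to outputFile: URL) async throws {
    let session = try PhotogrammetrySession(input: imagesFolder)
    try session.process(requests: [.modelFile(url: outputFile, detail: .reduced)])

    // Outputs arrive asynchronously: progress, per-request results, and completion.
    for try await output in session.outputs {
        switch output {
        case .processingComplete:
            return
        case .requestError(_, let error):
            throw error
        default:
            continue // Progress and other informational messages
        }
    }
}
```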

Quick Look and SharePlay: Enhancing the user experience

Quick Look offers volumetric windowed previews for spatial computing, while SharePlay is designed for syncing experiences across devices. These features underscore the platform’s commitment to collaborative and interactive applications, supported by detailed WWDC sessions on building spatial SharePlay experiences.
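SharePlay experiences are built with the GroupActivities framework. This sketch defines a hypothetical activity, offers it to the current FaceTime call, and joins incoming sessions; it assumes the app has the Group Activities entitlement, and all type and function names are illustrative.

```swift
import GroupActivities

// A hypothetical activity describing what participants do together.
struct ExploreGlobeActivity: GroupActivity {
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.title = "Explore the globe together"
        metadata.type = .generic
        return metadata
    }
}

// Offers the activity to the current FaceTime call, if there is one.
func startExploringTogether() async {
    let activity = ExploreGlobeActivity()
    switch await activity.prepareForActivation() {
    case .activationPreferred:
        do {
            _ = try await activity.activate()
        } catch {
            print("Failed to activate activity: \(error)")
        }
    default:
        break // Activation disabled or cancelled by the user
    }
}

// Joins sessions for this activity as they arrive.
func observeSessions() async {
    for await session in ExploreGlobeActivity.sessions() {
        session.join()
        // Create a GroupSessionMessenger(session: session) here to sync app state.
    }
}
```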

Compatibility and considerations for existing apps

visionOS supports a broad spectrum of existing apps, offering pathways for automatic support and full native experiences. We encourage developers to explore sessions and documentation on elevating their apps for spatial computing, addressing compatibility issues, and harnessing the full potential of visionOS functionalities.

There are two flavors of compatibility: automatic support and a full native experience. With the first, you get the same experience you have on iPhone/iPad; with the second, you can tap into the core visionOS functionality. In both cases there are some considerations to keep in mind, since platform differences can lead to a poor UX if not handled.

When adding Apple Vision Pro as a supported destination, you can choose between these two options: run your existing iPhone/iPad app as is, or adopt the visionOS SDK (formerly referred to as xrOS), depending on your requirements.
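A common way to share one codebase across both flavors is conditional compilation. In this sketch (StatusBadge is a hypothetical view), the os(visionOS) branch only compiles for native visionOS builds, while a compatible iPhone/iPad app running on Vision Pro takes the #else branch because it is still built with the iOS SDK.

```swift
import SwiftUI

// One shared view that adapts when compiled with the visionOS SDK.
struct StatusBadge: View {
    let text: String

    var body: some View {
        #if os(visionOS)
        // Native visionOS build: use the platform's glass material.
        Text(text)
            .padding()
            .glassBackgroundEffect()
        #else
        // iPhone/iPad build, which is also what runs in compatibility mode.
        Text(text)
            .padding()
            .background(.thinMaterial, in: RoundedRectangle(cornerRadius: 12))
        #endif
    }
}
```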

Our recommendations for refining your app for spatial computing on visionOS

Adapting apps for this platform requires a thoughtful approach to interactivity, compatibility, and user engagement. Here are key considerations to polish your apps for visionOS:

Enhancing interactivity

- Tappability and hover effects. Ensuring content is easily tappable is crucial. visionOS introduces a hoverStyle property for UIViews, allowing developers to add hover effects to custom components that don’t get one by default, enriching the user interface and signaling which elements are interactive.

- Gesture inputs. The platform supports at most two simultaneous inputs, matching the natural human capability of one touch per hand. Apps that rely on multi-touch gestures beyond this limit will need to redesign them to fit the platform’s interaction model.
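In SwiftUI, the counterpart of hoverStyle is the hoverEffect modifier. The sketch below gives a custom tappable tile, a hypothetical TimerTile in the spirit of the kitchen-timer example, a highlight that appears when the user looks at it; standard controls such as Button get a hover effect automatically.

```swift
import SwiftUI

// A custom, non-Button control that still reacts to the user's gaze.
struct TimerTile: View {
    let minutes: Int
    let onSelect: () -> Void

    var body: some View {
        VStack {
            Image(systemName: "timer")
                .font(.largeTitle)
            Text("\(minutes) min")
        }
        .padding()
        // Use the same rounded shape for hit testing and for the highlight.
        .contentShape([.interaction, .hoverEffect], RoundedRectangle(cornerRadius: 16))
        .hoverEffect(.highlight)
        .onTapGesture(perform: onSelect)
    }
}
```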

API compatibility and user experience

- API functionality. While targeting the iOS SDK, some APIs may not function as expected on visionOS due to differences in hardware configurations or system capabilities. It’s essential to verify the availability of frameworks and adapt to the supported hardware features.

- Prompt presentation. Unlike the modal presentation of prompts on iPhone and iPad, visionOS prompts do not block further interactions. This behavior necessitates adjustments in app design to accommodate non-modal prompts and ensure user flows remain intuitive.

- Location services. Location determination on visionOS mirrors the iPad experience, relying on Wi-Fi or on location shared from an iPhone. Apps that use location data should account for this and review the Core Location behavior specific to visionOS.
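A minimal Core Location sketch that requests a single fix and hands it to a callback, so the caller can inspect horizontalAccuracy before relying on street-level precision. OneShotLocator is a hypothetical name, and the app needs an NSLocationWhenInUseUsageDescription entry in its Info.plist.

```swift
import CoreLocation

// Requests a one-off location fix and reports it through a callback.
final class OneShotLocator: NSObject, CLLocationManagerDelegate {
    private let manager = CLLocationManager()
    var onLocation: ((CLLocation) -> Void)?

    override init() {
        super.init()
        manager.delegate = self
    }

    func start() {
        manager.requestWhenInUseAuthorization()
    }

    func locationManagerDidChangeAuthorization(_ manager: CLLocationManager) {
        switch manager.authorizationStatus {
        case .notDetermined, .denied, .restricted:
            break // Wait for a decision, or degrade gracefully without location
        default:
            manager.requestLocation()
        }
    }

    func locationManager(_ manager: CLLocationManager, didUpdateLocations locations: [CLLocation]) {
        guard let location = locations.last else { return }
        // A Wi-Fi-based fix can be much coarser than GPS, so callers should
        // check location.horizontalAccuracy (in meters) before trusting it.
        onLocation?(location)
    }

    func locationManager(_ manager: CLLocationManager, didFailWithError error: Error) {
        print("Location error: \(error)")
    }
}
```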

Adaptive UI and search functionality

- Screen orientation. visionOS dismisses the concept of screen rotation. For iOS-adapted apps, developers can tell the system in which orientation new scenes should be presented by setting the UIPreferredDefaultInterfaceOrientation key in the Info.plist. Apps built with the visionOS SDK don’t need this, since the concept doesn’t apply to them at all.

- Dictation in search. The platform introduces a ‘Look to dictate’ feature, transforming the search bar experience by replacing the magnifying glass icon with a microphone for dictation. This advancement requires enabling the .searchDictationBehavior modifier for searchable components, aligning with visionOS’s innovative interaction paradigms. Again, this is only needed in iOS adapted apps, since it’s the default behavior in apps targeting the visionOS SDK.
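A sketch of enabling Look to dictate on a searchable list. The recipe data and view name are hypothetical, and the modifier is wrapped in #if os(visionOS) on the assumption that the same file also compiles for iPhone and iPad, where the modifier may not be available.

```swift
import SwiftUI

struct RecipeSearchView: View {
    @State private var query = ""
    private let recipes = ["Pancakes", "Pasta", "Pizza"] // Hypothetical data

    private var results: [String] {
        query.isEmpty ? recipes : recipes.filter { $0.localizedCaseInsensitiveContains(query) }
    }

    var body: some View {
        NavigationStack {
            List(results, id: \.self) { Text($0) }
                .navigationTitle("Recipes")
        }
        .searchable(text: $query)
        #if os(visionOS)
        // Start dictation as soon as the user looks at the microphone in the search field.
        .searchDictationBehavior(.inline(activation: .onLook))
        #endif
    }
}
```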

Conclusion: Apple Vision Pro offers a world of new, immersive experiences

The transition into this new dimension of computing is not without its challenges, including adapting existing apps and mastering new development tools. However, the potential for creating engaging, immersive experiences that seamlessly blend the digital with the physical is unparalleled. In addition, this transition is aided significantly by the fact that everything is done with SwiftUI, meaning developers don’t need to learn a whole new language or framework – rather just the APIs and patterns related to this platform. I hope this article helps in your journey. Below you’ll find additional useful resources.

Additional useful resources

Development resources

Apple’s example apps

- Hello World – Shows how to transition between different visual modes with the 3D globe

- Happy Beam – Shows how to create a game that leverages an immersive space, including custom hand gestures

- Destination Video – Shows how to build a shared immersive playback experience that incorporates 3D video and spatial audio

Design resources